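This post is adapted from notes I kept while working on my graduation project, so the blog only carries the write-ups for techniques that actually went into the finished project.

For the full write-up, please follow the link below!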

1. Why I experimented by changing only the activation functions

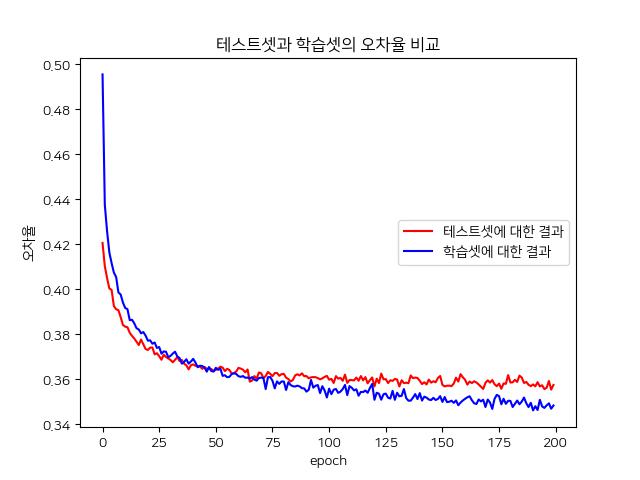

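When I trained on the 28 PPG recordings I had collected while working, the model's accuracy was around 0.9. After I added the PPG data from 100 measurement sessions that I collected myself, accuracy dropped to about 0.8. The larger training set may well explain the drop, but out of curiosity I decided to compare a relu-relu-sigmoid model against a sigmoid-sigmoid-sigmoid one.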

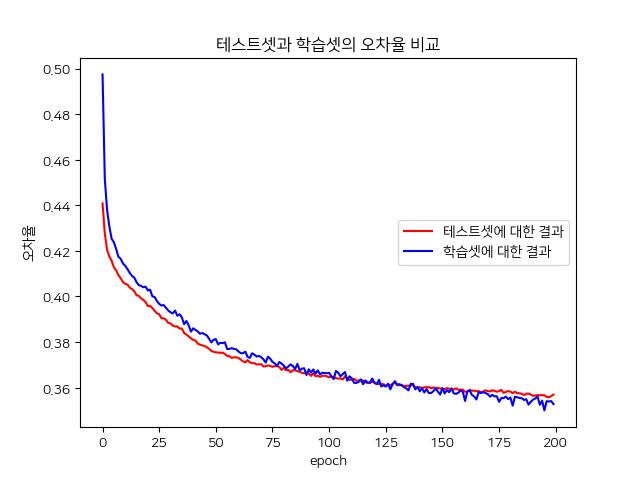

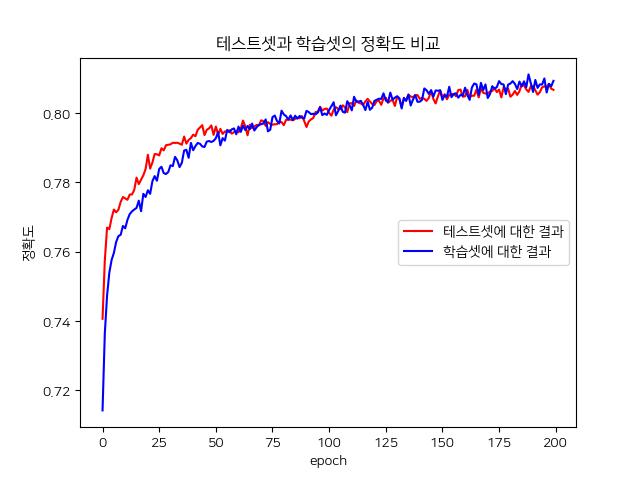

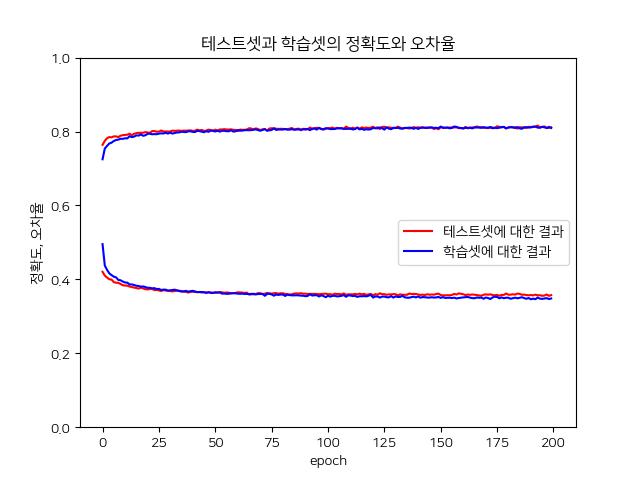

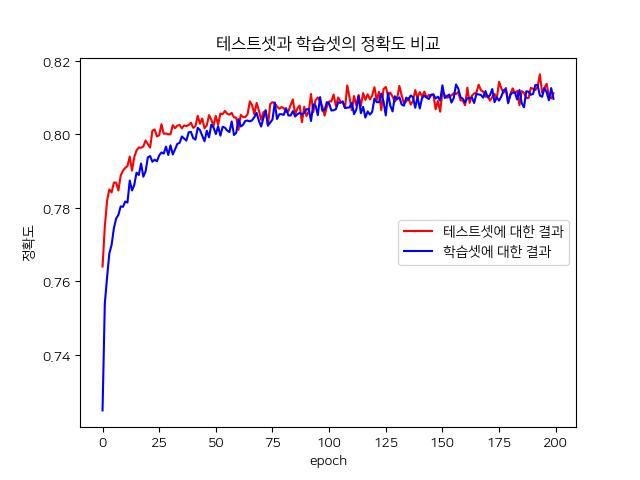

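The derivative of the sigmoid function is at most 0.25 and approaches 0 as the input moves away from 0, so gradients shrink each time backpropagation passes through a sigmoid layer (the vanishing gradient problem). I therefore expected the sigmoid-only model to be clearly less accurate. It was not: the two models ended up at nearly the same accuracy, and the sigmoid-only model's accuracy and loss curves showed the train data and test data tracking each other more closely than in the relu-relu-sigmoid model.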
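To put numbers on the vanishing-gradient argument, here is a minimal sketch in plain Python. It only looks at the activation-derivative factor and ignores the weights, which is a simplifying assumption on my part; the function names are illustrative and not from the project code.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic sigmoid."""
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_grad(x: float) -> float:
    """Derivative of the sigmoid: s(x) * (1 - s(x))."""
    s = sigmoid(x)
    return s * (1.0 - s)


peak = sigmoid_grad(0.0)   # the derivative is largest at x = 0
tail = sigmoid_grad(10.0)  # and nearly zero once the unit saturates

# Upper bound on the product of activation derivatives after
# backpropagating through n sigmoid layers (weights ignored).
bounds = {n: peak ** n for n in (1, 2, 3)}

print(peak, tail, bounds)
```

With only three layers the worst-case factor is 0.25 cubed, about 0.016, which is small but not negligible. That may be one reason the sigmoid-only network still trained here, although this sketch alone cannot confirm it.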

I am not sure why. The data may be biased, or there may simply be too little of it, but unfortunately I have already returned the patient monitor I used to measure the PPG signal, so I cannot run further experiments.

A. Model (relu-relu-sigmoid)

a. Input Data

- PPG (128 samples)

- Gray code of height (8 bits)

- Gray code of weight (8 bits)

b. Output Data

- Gray code of diastolic blood pressure (8 bits)

- Gray code of systolic blood pressure (8 bits)
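For readers unfamiliar with Gray code, the following is a minimal sketch of how an integer can be turned into 8 Gray-code bits and back. The helper names and the most-significant-bit-first order are assumptions made for illustration; the post does not show the project's actual encoding code.

```python
def to_gray_bits(value, width=8):
    """Encode a non-negative integer as Gray-code bits, MSB first."""
    if not 0 <= value < (1 << width):
        raise ValueError("value does not fit in the given width")
    gray = value ^ (value >> 1)  # standard binary-to-Gray conversion
    return [(gray >> i) & 1 for i in range(width - 1, -1, -1)]


def from_gray_bits(bits):
    """Decode MSB-first Gray-code bits back to an integer."""
    value = 0
    prev = 0
    for bit in bits:
        prev ^= bit  # each binary bit is the XOR of all Gray bits so far
        value = (value << 1) | prev
    return value


print(to_gray_bits(76))                     # Gray bits for a pressure of 76
print(from_gray_bits(to_gray_bits(117)))    # round trip
```

The useful property is that neighbouring integers differ in exactly one bit. Read MSB first, the last 16 values of the X_test sample printed later in this post would decode to 174 and 63, which at least looks plausible for a height and a weight, though the units and bit order are my guess.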

c. Modeling

import tensorflow as tf

epoch = 200

batch_size = 5

model = tf.keras.Sequential()

model.add(tf.keras.layers.Dense(64, input_dim=144, activation='relu'))  # 144 inputs = 128 PPG + 8 height bits + 8 weight bits

model.add(tf.keras.layers.Dropout(0.4))  # drop 40% of units during training

model.add(tf.keras.layers.Dense(64, activation='relu'))

model.add(tf.keras.layers.Dropout(0.4))

model.add(tf.keras.layers.Dense(16, activation='sigmoid'))  # 16 outputs = 8 diastolic bits + 8 systolic bits

model.compile(loss='binary_crossentropy', optimizer='adam', metrics=['accuracy'])

history = model.fit(X_train, Y_train, epochs=epoch, batch_size=batch_size, validation_data=(X_test, Y_test))

Model: "sequential"

| Layer (type) | Output Shape | Param # |
| --- | --- | --- |
| dense (Dense) | (None, 64) | 9280 |
| dropout (Dropout) | (None, 64) | 0 |
| dense_1 (Dense) | (None, 64) | 4160 |
| dropout_1 (Dropout) | (None, 64) | 0 |
| dense_2 (Dense) | (None, 16) | 1040 |

Total params: 14,480

Trainable params: 14,480

Non-trainable params: 0
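The parameter counts in the summary can be checked by hand: a Dense layer has one weight per input-output pair plus one bias per output, and Dropout adds none. A short sketch, with a helper name of my own choosing:

```python
def dense_params(n_in, n_out):
    """Weights (n_in * n_out) plus biases (n_out) of a fully connected layer."""
    return n_in * n_out + n_out


# Input width followed by the width of each Dense layer in the model.
layer_sizes = [144, 64, 64, 16]

counts = [dense_params(a, b) for a, b in zip(layer_sizes, layer_sizes[1:])]
total = sum(counts)

print(counts, total)  # [9280, 4160, 1040] 14480
```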

d. Learning

5/2182 [..............................] - ETA: 0s - loss: 0.3980 - accuracy: 0.8250

385/2182 [====>.........................] - ETA: 0s - loss: 0.3507 - accuracy: 0.8037

770/2182 [=========>....................] - ETA: 0s - loss: 0.3473 - accuracy: 0.8092

1160/2182 [==============>...............] - ETA: 0s - loss: 0.3486 - accuracy: 0.8095

1545/2182 [====================>.........] - ETA: 0s - loss: 0.3506 - accuracy: 0.8080

1930/2182 [=========================>....] - ETA: 0s - loss: 0.3478 - accuracy: 0.8101

2182/2182 [==============================] - 0s 175us/sample - loss: 0.3492 - accuracy: 0.8093 - val_loss: 0.3591 - val_accuracy: 0.8103

Epoch 199/200

5/2182 [..............................] - ETA: 0s - loss: 0.2122 - accuracy: 0.9000

395/2182 [====>.........................] - ETA: 0s - loss: 0.3446 - accuracy: 0.8149

785/2182 [=========>....................] - ETA: 0s - loss: 0.3425 - accuracy: 0.8154

1175/2182 [===============>..............] - ETA: 0s - loss: 0.3458 - accuracy: 0.8139

1570/2182 [====================>.........] - ETA: 0s - loss: 0.3461 - accuracy: 0.8132

1955/2182 [=========================>....] - ETA: 0s - loss: 0.3465 - accuracy: 0.8127

2182/2182 [==============================] - 0s 173us/sample - loss: 0.3468 - accuracy: 0.8126 - val_loss: 0.3553 - val_accuracy: 0.8098

e. Prediction on Test Data

- X value, Y value, and predicted value for test_data[0]

X_test[1] :

[[ 5.25969345e-01 5.12297470e-01 4.73234970e-01 4.13664657e-01

3.38469345e-01 2.54484970e-01 1.66594345e-01 8.26099700e-02

6.43809500e-03 -5.80150300e-02 -1.06843155e-01 -1.40046280e-01

-1.57624405e-01 -1.61530655e-01 -1.53718155e-01 -1.36140030e-01

-1.15632218e-01 -9.31712800e-02 -7.36400300e-02 -5.99681550e-02

-5.50853430e-02 -6.19212800e-02 -7.94994050e-02 -1.09772843e-01

-1.51765030e-01 -2.04499405e-01 -2.64069718e-01 -3.28522843e-01

-3.93952530e-01 -4.55475968e-01 -5.08210343e-01 -5.47272843e-01

-5.68757218e-01 -5.68757218e-01 -5.46296280e-01 -4.98444718e-01

-4.27155655e-01 -3.34382218e-01 -2.24030655e-01 -1.02936905e-01

2.30396570e-02 1.47063095e-01 2.61320907e-01 3.59953720e-01

4.35149032e-01 4.84953720e-01 5.05461532e-01 4.98625595e-01

4.64445907e-01 4.06828720e-01 3.32609970e-01 2.46672470e-01

1.55852157e-01 6.79615320e-02 -1.21165930e-02 -7.94994050e-02

-1.31257218e-01 -1.64460343e-01 -1.79108780e-01 -1.77155655e-01

-1.61530655e-01 -1.35163468e-01 -1.03913468e-01 -7.07103430e-02

-4.04369050e-02 -1.89525300e-02 -8.21034300e-03 -1.11400300e-02

-2.87181550e-02 -6.19212800e-02 -1.07819718e-01 -1.65436905e-01

-2.29890030e-01 -2.97272843e-01 -3.61725968e-01 -4.18366593e-01

-4.62311905e-01 -4.86725968e-01 -4.90632218e-01 -4.68171280e-01

-4.21296280e-01 -3.49030655e-01 -2.55280655e-01 -1.43952530e-01

-1.99290930e-02 1.08000595e-01 2.33977157e-01 3.49211532e-01

4.46867782e-01 5.22063095e-01 5.69914657e-01 5.89445907e-01

5.79680282e-01 5.42570907e-01 4.83000595e-01 4.05852157e-01

3.17961532e-01 2.25188095e-01 1.35344345e-01 5.33130950e-02

-1.60228430e-02 -6.87572180e-02 -1.02936905e-01 -1.19538468e-01

-1.18561905e-01 -1.04890030e-01 -8.14525300e-02 -5.41087800e-02

-2.67650300e-02 -4.30409300e-03 8.39122000e-03 9.36778200e-03

-5.28065500e-03 -3.55540930e-02 -8.04759680e-02 -1.37116593e-01

-2.01569718e-01 -2.70905655e-01 -3.37311905e-01 -3.95905655e-01

-4.41804093e-01 -4.68171280e-01 -4.72077530e-01 -4.50593155e-01

-4.02741593e-01 -3.30475968e-01 -2.34772843e-01 -1.23444718e-01

1.00000000e+00 1.00000000e+00 1.00000000e+00 1.00000000e+00

1.00000000e+00 0.00000000e+00 0.00000000e+00 1.00000000e+00

0.00000000e+00 0.00000000e+00 1.00000000e+00 0.00000000e+00

0.00000000e+00 0.00000000e+00 0.00000000e+00 0.00000000e+00]

====Y values====

BP_D_Y_dec 76

BP_S_Y_dec 117

====Predicted values====

BP_D_dec 74

BP_S_dec 121

=======================================================================================================] - 0s 19us/sample - loss: 0.2293 - accuracy: 0.8997

Accuracy: 0.81116456

Loss: 0.35738069226599145
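One thing worth keeping in mind when reading this accuracy: as far as I know, with `loss='binary_crossentropy'` Keras resolves `metrics=['accuracy']` to binary accuracy, which scores each of the 16 output bits separately after thresholding at 0.5. That is different from asking how often all bits of a sample are right. The sketch below contrasts the two in plain Python; the function names and the toy numbers are made up for illustration and are not project data.

```python
def bitwise_accuracy(y_true, y_prob, threshold=0.5):
    """Fraction of individual bits predicted correctly."""
    correct = 0
    total = 0
    for true_bits, probs in zip(y_true, y_prob):
        for t, p in zip(true_bits, probs):
            correct += int((p > threshold) == bool(t))
            total += 1
    return correct / total


def exact_match_accuracy(y_true, y_prob, threshold=0.5):
    """Fraction of samples whose bits are all predicted correctly."""
    hits = 0
    for true_bits, probs in zip(y_true, y_prob):
        pred = [int(p > threshold) for p in probs]
        hits += int(pred == list(true_bits))
    return hits / len(y_true)


# Toy data: two samples with four output bits each.
y_true = [[1, 0, 1, 1], [0, 0, 1, 0]]
y_prob = [[0.9, 0.2, 0.4, 0.8], [0.1, 0.3, 0.7, 0.2]]

print(bitwise_accuracy(y_true, y_prob))      # 0.875
print(exact_match_accuracy(y_true, y_prob))  # 0.5
```

If the reported value is indeed per-bit, an accuracy of about 0.81 would mean roughly 3 of the 16 bits are wrong on average, so it says little by itself about how far the decoded blood pressure is from the true value.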

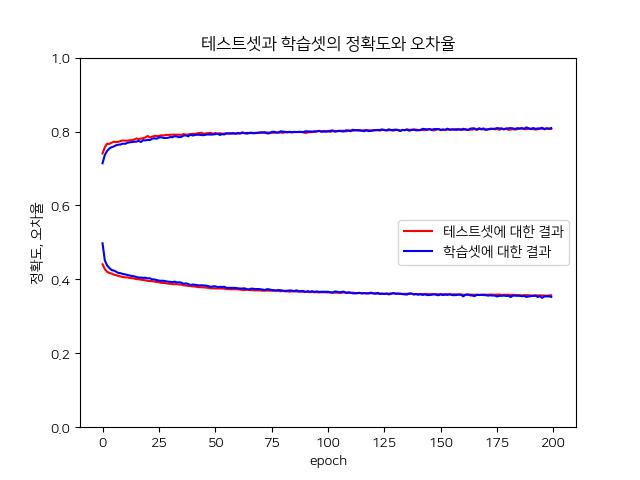

f. Loss & Accuracy Graph

B. Model (sigmoid-sigmoid-sigmoid)

a. Input Data

- PPG (128 samples)

- Gray code of height (8 bits)

- Gray code of weight (8 bits)

b. Output Data

- Gray code of diastolic blood pressure (8 bits)

- Gray code of systolic blood pressure (8 bits)

c. Modeling

import tensorflow as tf

epoch = 200

batch_size = 5

model = tf.keras.Sequential()

model.add(tf.keras.layers.Dense(64, input_dim=144, activation='sigmoid'))  # only the hidden activations differ from model A

model.add(tf.keras.layers.Dropout(0.4))  # drop 40% of units during training

model.add(tf.keras.layers.Dense(64, activation='sigmoid'))

model.add(tf.keras.layers.Dropout(0.4))

model.add(tf.keras.layers.Dense(16, activation='sigmoid'))  # 16 outputs = 8 diastolic bits + 8 systolic bits

model.compile(loss='binary_crossentropy', optimizer='adam', metrics=['accuracy'])

history = model.fit(X_train, Y_train, epochs=epoch, batch_size=batch_size, validation_data=(X_test, Y_test))

Model: "sequential"

| Layer (type) | Output Shape | Param # |
| --- | --- | --- |
| dense (Dense) | (None, 64) | 9280 |
| dropout (Dropout) | (None, 64) | 0 |
| dense_1 (Dense) | (None, 64) | 4160 |
| dropout_1 (Dropout) | (None, 64) | 0 |
| dense_2 (Dense) | (None, 16) | 1040 |

Total params: 14,480

Trainable params: 14,480

Non-trainable params: 0

d. Learning

5/2182 [..............................] - ETA: 0s - loss: 0.3132 - accuracy: 0.9000

385/2182 [====>.........................] - ETA: 0s - loss: 0.3502 - accuracy: 0.8109

770/2182 [=========>....................] - ETA: 0s - loss: 0.3516 - accuracy: 0.8088

1160/2182 [==============>...............] - ETA: 0s - loss: 0.3550 - accuracy: 0.8056

1545/2182 [====================>.........] - ETA: 0s - loss: 0.3538 - accuracy: 0.8067

1930/2182 [=========================>....] - ETA: 0s - loss: 0.3545 - accuracy: 0.8070

2182/2182 [==============================] - 0s 176us/sample - loss: 0.3541 - accuracy: 0.8078 - val_loss: 0.3563 - val_accuracy: 0.8071

Epoch 200/200

5/2182 [..............................] - ETA: 0s - loss: 0.2853 - accuracy: 0.8625

385/2182 [====>.........................] - ETA: 0s - loss: 0.3538 - accuracy: 0.8071

770/2182 [=========>....................] - ETA: 0s - loss: 0.3523 - accuracy: 0.8084

1155/2182 [==============>...............] - ETA: 0s - loss: 0.3488 - accuracy: 0.8124

1540/2182 [====================>.........] - ETA: 0s - loss: 0.3530 - accuracy: 0.8091

1915/2182 [=========================>....] - ETA: 0s - loss: 0.3527 - accuracy: 0.8095

2182/2182 [==============================] - 0s 176us/sample - loss: 0.3528 - accuracy: 0.8093 - val_loss: 0.3569 - val_accuracy: 0.8068
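The claim that the sigmoid-only model's train and test curves agree more closely can be checked roughly against the final logged lines of each run. The sketch below just subtracts the numbers copied from the logs in this post. Note that the last line shown for model A is from epoch 199 and for model B from epoch 200, and that this is a single run of each model, so the comparison is only indicative.

```python
# Final-epoch metrics copied from the training logs in this post.
final_epoch = {
    "A (relu-relu-sigmoid), epoch 199": {
        "loss": 0.3468, "accuracy": 0.8126,
        "val_loss": 0.3553, "val_accuracy": 0.8098,
    },
    "B (sigmoid-sigmoid-sigmoid), epoch 200": {
        "loss": 0.3528, "accuracy": 0.8093,
        "val_loss": 0.3569, "val_accuracy": 0.8068,
    },
}


def train_test_gap(metrics):
    """Gap between training and validation metrics (positive = train is better)."""
    return {
        "loss_gap": round(metrics["val_loss"] - metrics["loss"], 4),
        "accuracy_gap": round(metrics["accuracy"] - metrics["val_accuracy"], 4),
    }


gaps = {name: train_test_gap(m) for name, m in final_epoch.items()}

for name, gap in gaps.items():
    print(name, gap)
```

On these numbers the loss gap for model B is about half that of model A, while the accuracy gaps are nearly identical. Training metrics are also computed with dropout active, so the gaps are not a strict measure of overfitting.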

e. Prediction on Test Data

- X value, Y value, and predicted value for test_data[0]

X_test[1] :

[[ 5.25969345e-01 5.12297470e-01 4.73234970e-01 4.13664657e-01

3.38469345e-01 2.54484970e-01 1.66594345e-01 8.26099700e-02

6.43809500e-03 -5.80150300e-02 -1.06843155e-01 -1.40046280e-01

-1.57624405e-01 -1.61530655e-01 -1.53718155e-01 -1.36140030e-01

-1.15632218e-01 -9.31712800e-02 -7.36400300e-02 -5.99681550e-02

-5.50853430e-02 -6.19212800e-02 -7.94994050e-02 -1.09772843e-01

-1.51765030e-01 -2.04499405e-01 -2.64069718e-01 -3.28522843e-01

-3.93952530e-01 -4.55475968e-01 -5.08210343e-01 -5.47272843e-01

-5.68757218e-01 -5.68757218e-01 -5.46296280e-01 -4.98444718e-01

-4.27155655e-01 -3.34382218e-01 -2.24030655e-01 -1.02936905e-01

2.30396570e-02 1.47063095e-01 2.61320907e-01 3.59953720e-01

4.35149032e-01 4.84953720e-01 5.05461532e-01 4.98625595e-01

4.64445907e-01 4.06828720e-01 3.32609970e-01 2.46672470e-01

1.55852157e-01 6.79615320e-02 -1.21165930e-02 -7.94994050e-02

-1.31257218e-01 -1.64460343e-01 -1.79108780e-01 -1.77155655e-01

-1.61530655e-01 -1.35163468e-01 -1.03913468e-01 -7.07103430e-02

-4.04369050e-02 -1.89525300e-02 -8.21034300e-03 -1.11400300e-02

-2.87181550e-02 -6.19212800e-02 -1.07819718e-01 -1.65436905e-01

-2.29890030e-01 -2.97272843e-01 -3.61725968e-01 -4.18366593e-01

-4.62311905e-01 -4.86725968e-01 -4.90632218e-01 -4.68171280e-01

-4.21296280e-01 -3.49030655e-01 -2.55280655e-01 -1.43952530e-01

-1.99290930e-02 1.08000595e-01 2.33977157e-01 3.49211532e-01

4.46867782e-01 5.22063095e-01 5.69914657e-01 5.89445907e-01

5.79680282e-01 5.42570907e-01 4.83000595e-01 4.05852157e-01

3.17961532e-01 2.25188095e-01 1.35344345e-01 5.33130950e-02

-1.60228430e-02 -6.87572180e-02 -1.02936905e-01 -1.19538468e-01

-1.18561905e-01 -1.04890030e-01 -8.14525300e-02 -5.41087800e-02

-2.67650300e-02 -4.30409300e-03 8.39122000e-03 9.36778200e-03

-5.28065500e-03 -3.55540930e-02 -8.04759680e-02 -1.37116593e-01

-2.01569718e-01 -2.70905655e-01 -3.37311905e-01 -3.95905655e-01

-4.41804093e-01 -4.68171280e-01 -4.72077530e-01 -4.50593155e-01

-4.02741593e-01 -3.30475968e-01 -2.34772843e-01 -1.23444718e-01

1.00000000e+00 1.00000000e+00 1.00000000e+00 1.00000000e+00

1.00000000e+00 0.00000000e+00 0.00000000e+00 1.00000000e+00

0.00000000e+00 0.00000000e+00 1.00000000e+00 0.00000000e+00

0.00000000e+00 0.00000000e+00 0.00000000e+00 0.00000000e+00]

====Y values====

BP_D_Y_dec 76

BP_S_Y_dec 117

====Predicted values====

BP_D_dec 74

BP_S_dec 121

=======================================================================================================] - 0s 19us/sample - loss: 0.2293 - accuracy: 0.8997

Accuracy: 0.80675745

Loss: 0.3569375118638715

f. Loss & Accuracy Graph